IoT Data Analysis

Classical data analysis project focused on data quality assessment and validation of an automated irrigation algorithm.

Precision agriculture promises to optimize water usage, but in real-world deployments, data quality remains the biggest bottleneck. This project, conducted within a European agricultural testing framework, validated a commercial IoT solution for vineyards against scientific reference instruments.

Objectives

- Assess the reliability of a new IoT sensor setup measuring soil water potential

- Evaluate the accuracy of an automated irrigation decision algorithm fed by those sensors

My role

- Designed the full data analysis pipeline

- Implemented anomaly detection and cleaning logic

- Reconstructed and stress-tested the irrigation algorithm

- Translated results into actionable recommendations for stakeholders

Tech Stack

| Language | Python 3.11+ |
|---|---|
| Analysis | Pandas, NumPy, SciPy, Statsmodels |
| Viz | Matplotlib, Seaborn |
| DevOps | uv (package manager), Jupyter |

Repository Structure

The code is organized to ensure reproducibility:

| Step | Purpose |
|---|---|
| `01_preprocessing` | API fetch & cleaning |
| `02_data_analysis` | Correlation & sensor lag |
| `03_water_effects` | Rain/Irrigation impact |
| `04_irrigation_suggestions` | Algorithm validation |

The Challenge: Data in the Wild

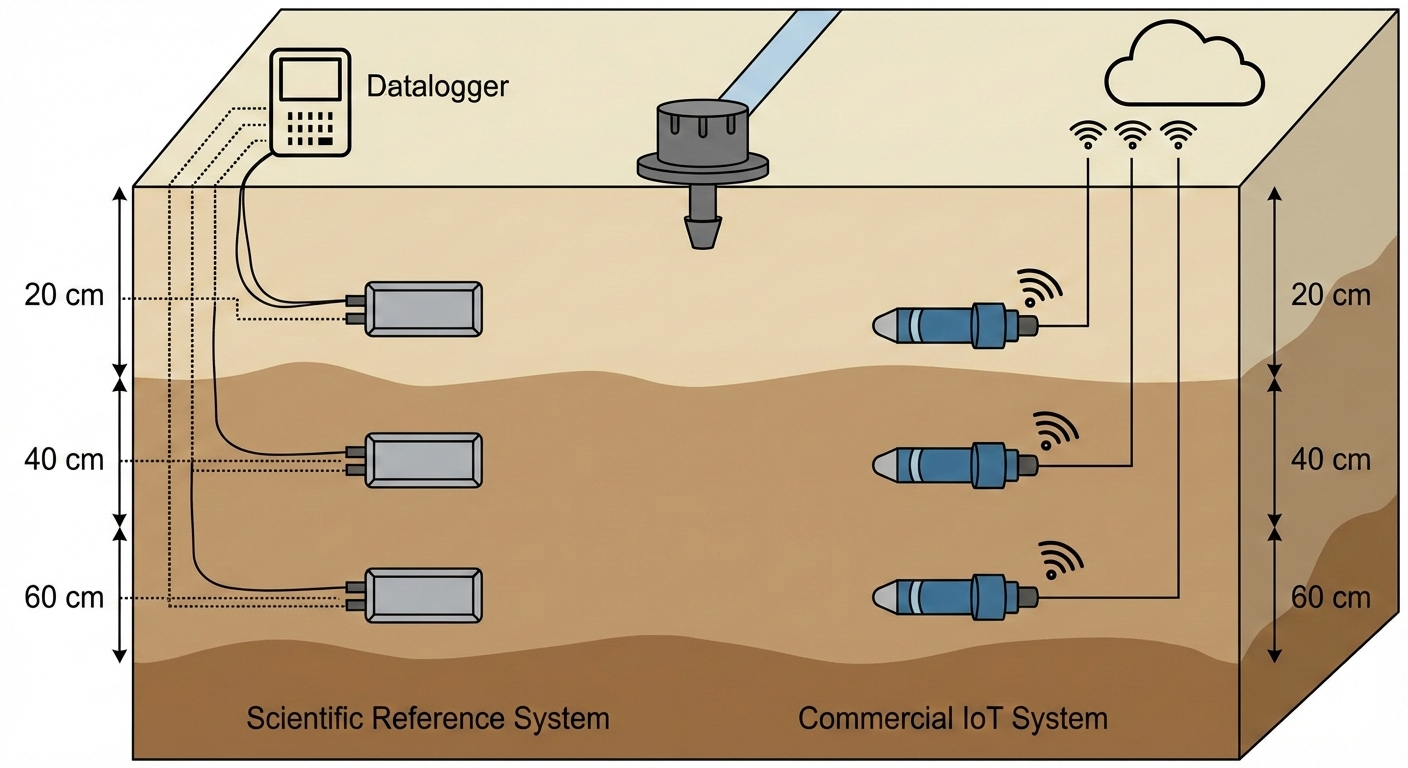

Real-world sensor data is rarely clean. The dataset consisted of time-series measurements from soil water potential sensors installed at different depths and at varying distances from irrigation drippers in a vineyard located in Northern Italy.

Two hardware systems were compared:

- Commercial IoT System: The solution under test, streaming data to a cloud-based API.

- Scientific Reference System: High-precision suction tensiometers used as ground truth.

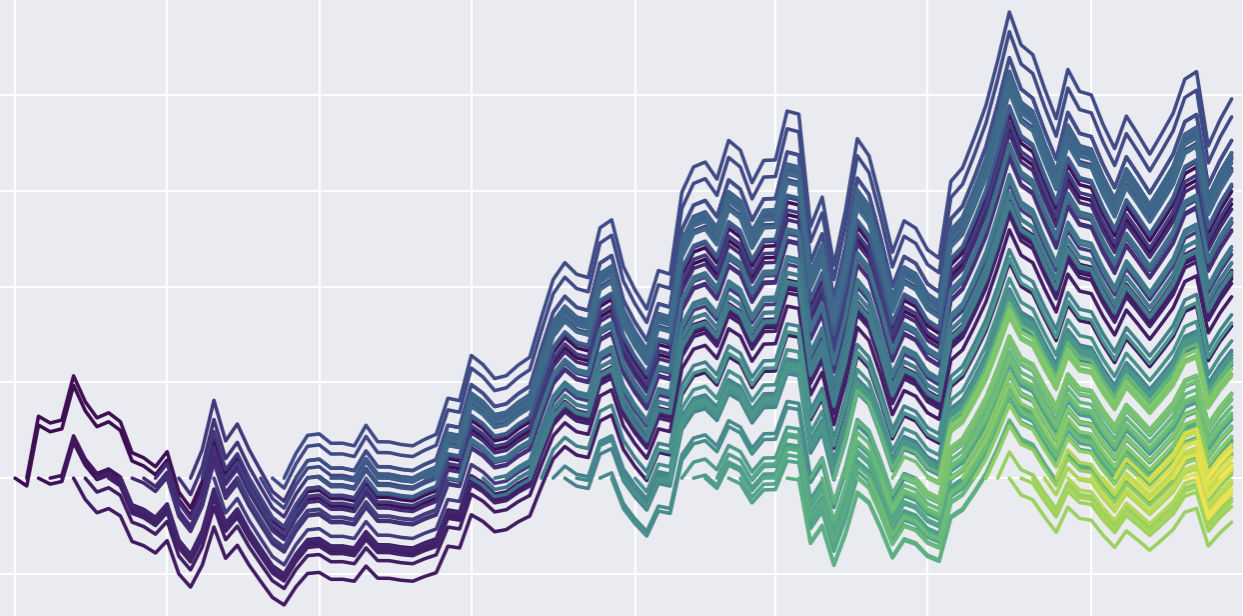

Initial exploratory data analysis revealed several critical issues affecting the commercial sensors: missing values, prolonged constant readings (“stuck” signals), excessive noise, and outliers incompatible with plant survival (soil water potentials beyond the Permanent Wilting Point).
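The four issue types listed above can each be turned into a boolean flag. The following is a minimal sketch, not the project's actual cleaning logic: it assumes an hourly series in kPa (negative values), and the function name `flag_anomalies` and every threshold are hypothetical. The wilting-point cutoff uses the conventional textbook value of about -1500 kPa, since the project's real cutoffs are not disclosed.

```python
import numpy as np
import pandas as pd

# All thresholds below are illustrative assumptions, not the project's values.
PWP_KPA = -1500.0      # conventional Permanent Wilting Point (about -1.5 MPa)
STUCK_HOURS = 6        # identical readings for at least this long count as "stuck"
NOISE_WINDOW = 24      # hours used for the rolling-median baseline
NOISE_K = 4.0          # residual must exceed K times the robust scale
MIN_SCALE_KPA = 1.0    # floor so a very flat signal does not flag tiny wiggles


def flag_anomalies(series: pd.Series) -> pd.DataFrame:
    """Return one boolean column per anomaly type for an hourly series in kPa."""
    flags = pd.DataFrame(index=series.index)

    # 1. Missing values.
    flags["missing"] = series.isna()

    # 2. Stuck signal: runs of identical consecutive readings.
    #    A new run starts whenever the value differs from the previous one.
    run_id = series.ne(series.shift()).cumsum()
    run_len = run_id.groupby(run_id).transform("count")
    flags["stuck"] = series.notna() & (run_len >= STUCK_HOURS)

    # 3. Physically implausible: drier than the Permanent Wilting Point.
    flags["implausible"] = series < PWP_KPA

    # 4. Noise spikes: large deviation from a centered rolling median,
    #    scaled by a MAD-style robust estimate of the typical residual.
    baseline = series.rolling(NOISE_WINDOW, center=True, min_periods=3).median()
    resid = (series - baseline).abs()
    scale = max(1.4826 * resid.median(), MIN_SCALE_KPA)
    flags["spike"] = resid > NOISE_K * scale

    return flags
```

Keeping one column per anomaly type, rather than a single "bad" flag, makes it possible to report how often each failure mode occurs per sensor and to apply a different repair strategy to each.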

Methodology & Code

The analysis pipeline was built using a modular approach:

- Data Ingestion: Automated fetching from the partner’s API using secure authentication.

- Preprocessing: Data were aggregated to hourly resolution. Custom anomaly-detection logic flagged each type of issue found during exploration: missing values, stuck signals, excessive noise, and physically implausible outliers.

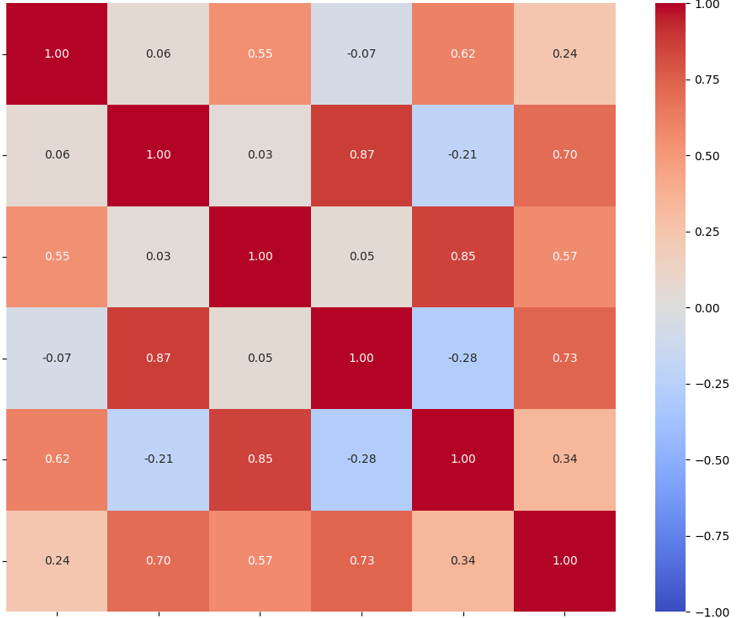

- Correlation Analysis: Pearson correlation and lag analysis were used to quantify agreement and response delays between commercial sensors and scientific reference instruments.

- Water Effects: Sensor behavior was evaluated during irrigation and rainfall events to assess physical responsiveness.
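The lag analysis in the correlation step can be sketched as a Pearson correlation computed at a range of time shifts. This is an illustration under stated assumptions rather than the project's code: both series are assumed to share an hourly index, and the names `lagged_pearson` and `best_lag`, as well as the 24-hour search range, are hypothetical.

```python
import numpy as np
import pandas as pd


def lagged_pearson(reference: pd.Series, sensor: pd.Series,
                   max_lag_hours: int = 24) -> pd.Series:
    """Pearson r between the reference and the sensor for lags 0..max_lag_hours.

    A lag of k hours means the sensor reports at time t + k what the
    reference showed at time t, i.e. the sensor responds k hours late.
    """
    result = {}
    for lag in range(max_lag_hours + 1):
        # shift(-lag) aligns sensor[t + lag] with reference[t];
        # Series.corr drops pairs where either value is NaN.
        result[lag] = reference.corr(sensor.shift(-lag))
    return pd.Series(result, name="pearson_r").rename_axis("lag_hours")


def best_lag(reference: pd.Series, sensor: pd.Series,
             max_lag_hours: int = 24) -> int:
    """Lag (in hours) at which the correlation with the reference peaks."""
    return int(lagged_pearson(reference, sensor, max_lag_hours).idxmax())
```

A high peak correlation at a short lag suggests the commercial sensor tracks the reference with a small delay, while a low or flat correlation profile suggests it is not following the same physical signal.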

Results: Algorithmic vs. Human Logic

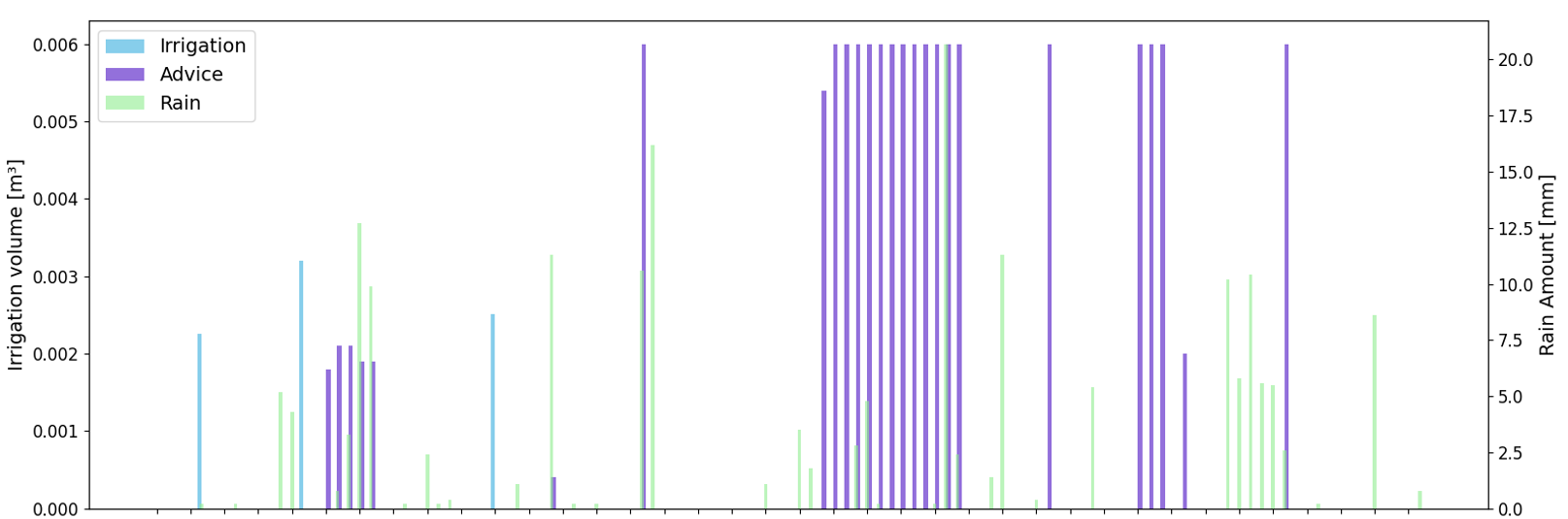

The most critical findings emerged when simulating the irrigation recommendations produced by the commercial system.

The algorithm relied on a matrix-based aggregation of sensor inputs to trigger irrigation events.

Reconstructing an entire growing season revealed a more than tenfold (>10×) discrepancy between the number of irrigation events the algorithm recommended and the number the field actually required:

- Algorithmic Suggestions: several irrigation events

- Real Field Practice: only a few targeted interventions

The analysis demonstrated that the algorithm was reacting primarily to sensor noise rather than genuine plant water stress.

Without an explicit data quality layer, erroneous signals were systematically amplified into incorrect decision-making.
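The amplification effect can be illustrated with a deliberately simplified rule. The sketch below does not reproduce the commercial algorithm or its matrix-based aggregation, which are not described in detail here. It assumes a hypothetical single-sensor rule that recommends irrigation whenever soil water potential drops below a threshold, and uses a rolling median as a stand-in for a data quality layer. The names `count_triggers` and `despike` and the window size are assumptions.

```python
import pandas as pd


def count_triggers(potential_kpa: pd.Series, threshold_kpa: float) -> int:
    """Count irrigation triggers under a simple threshold rule.

    A trigger is the first hour of each excursion below the threshold,
    so one sustained dry spell counts once, however long it lasts.
    """
    below = potential_kpa < threshold_kpa
    starts = below & ~below.shift(1, fill_value=False)
    return int(starts.sum())


def despike(potential_kpa: pd.Series, window: int = 5) -> pd.Series:
    """Suppress isolated spikes with a centered rolling median."""
    return potential_kpa.rolling(window, center=True, min_periods=1).median()
```

Applied to a raw signal, `count_triggers` registers every isolated spike that dips below the threshold as a separate irrigation event. Applied to `despike(signal)`, only excursions lasting several hours survive, which is closer to how an agronomist would read the same data.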

Note: To maintain confidentiality, all company names, locations, dates, and specific proprietary values have been anonymized or modified. The analysis focuses on the technical methodology and challenges encountered during the project.