PRISMA Review with LLM & RAG

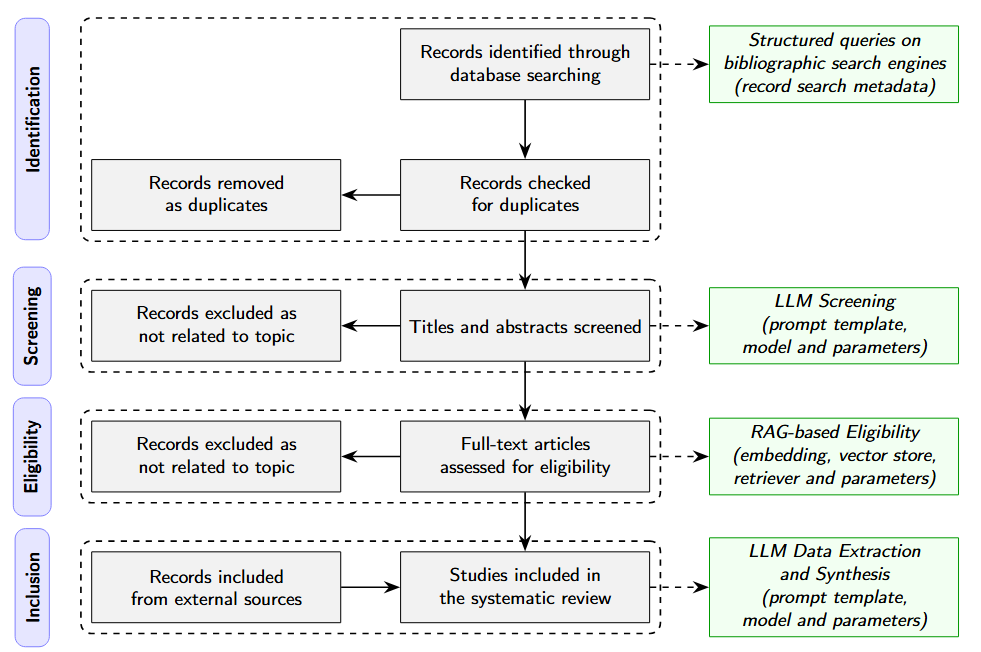

A research framework designed to automate and standardize the systematic literature review process in line with PRISMA 2020.

Automating PRISMA is a framework that simplifies and accelerates systematic literature reviews while aligning strictly with the PRISMA 2020 standards. By combining Large Language Models (LLMs), specifically Google Gemini, with Retrieval-Augmented Generation (RAG), the project automates the most time-consuming phases of bibliographic research.

Objectives

- Standardize the identification and screening process of scientific contributions.

- Reduce human error and subjective variability in eligibility analysis.

- Accelerate the screening phases of titles, abstracts, and full texts while maintaining high methodological rigor.

My Role

- Design of the framework architecture and automation pipeline.

- Implementation of the LLM-based screening logic (Gemini 2.5 Flash/Pro).

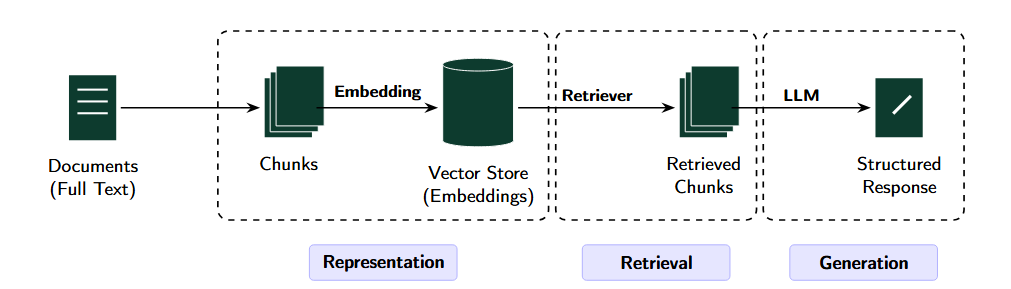

- Development of the RAG system for in-depth PDF analysis and inclusion criteria verification.

- Validation of automated results against “human-verified” reference datasets.
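The validation step can be sketched as a comparison of two sets of decisions. This is a minimal example, assuming each record carries a boolean include/exclude decision keyed by a record ID in both the automated output and the human-verified reference; the metrics (recall, precision, Cohen's kappa) and the name compare_decisions are illustrative choices, not necessarily those used in the project.

```python
from dataclasses import dataclass


@dataclass
class AgreementReport:
    """Agreement between automated and human-verified include/exclude decisions."""
    n: int
    recall: float     # share of human-included records the model also included
    precision: float  # share of model-included records humans also included
    kappa: float      # Cohen's kappa: observed agreement corrected for chance


def compare_decisions(model: dict[str, bool], human: dict[str, bool]) -> AgreementReport:
    """Compare two sets of decisions on the records they have in common."""
    ids = model.keys() & human.keys()
    n = len(ids)
    if n == 0:
        raise ValueError("no overlapping record IDs to compare")

    # Confusion-matrix counts, treating the human decision as ground truth.
    tp = sum(model[i] and human[i] for i in ids)
    fp = sum(model[i] and not human[i] for i in ids)
    fn = sum(not model[i] and human[i] for i in ids)
    tn = n - tp - fp - fn

    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0

    # Chance agreement from each rater's marginal "include" rate.
    p_obs = (tp + tn) / n
    p_model = (tp + fp) / n
    p_human = (tp + fn) / n
    p_exp = p_model * p_human + (1 - p_model) * (1 - p_human)
    kappa = (p_obs - p_exp) / (1 - p_exp) if p_exp < 1 else 1.0
    return AgreementReport(n, recall, precision, kappa)
```

In screening, recall is usually the figure to watch: a wrongly excluded study is lost for the rest of the review, while a wrongly included one can still be caught at the eligibility stage.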

Tech Stack

| Category | Tools |
|---|---|
| Language | Python 3.11+ |
| AI/LLM | Google Gemini (Generative AI), LangChain |
| Vector DB | FAISS (Facebook AI Similarity Search) |
| DevOps | uv (package manager), Jupyter, python-dotenv |

Repository Structure

The organization follows the PRISMA workflow phases:

| Directory | Contents |
|---|---|
| data | Bibliographic input data and intermediate files for all phases |
| 01_identification_screening | Identification and screening phases |
| 02_screening_evaluation | Comparison of results with the reference study for the first two PRISMA phases |
| 03_eligibility_RAG | Full-text analysis via RAG |
| replicated_study_workflow | Complete end-to-end workflow for replicating the reference study |

The Challenge: Efficiency and Rigor in Systematic Review

Systematic reviews require the screening of thousands of articles, a process often prone to human fatigue and bias. The main challenge lies in maintaining the traceability and reproducibility required by PRISMA standards while leveraging the speed of artificial intelligence.

The framework addresses three critical phases:

- Identification: Management of bibliographic exports from Scopus, IEEE Xplore, and Web of Science (WoS).

- Screening: Rapid filtering based on deterministic criteria applied to abstracts.

- Eligibility: Complex analysis of full-text manuscripts in PDF format.
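Exports from different databases use different column names, and the same paper often appears in more than one of them. A minimal sketch of merging and deduplicating them, assuming the rows have already been read (for example with csv.DictReader) and that the column names in the hypothetical COLUMN_MAP match your exports; matching on DOI first and on a normalized title second is one common strategy, not necessarily the project's.

```python
import re
import unicodedata
from dataclasses import dataclass, field

# Hypothetical column names per source; check them against your own export files.
COLUMN_MAP = {
    "scopus": {"title": "Title", "doi": "DOI", "year": "Year"},
    "ieee": {"title": "Document Title", "doi": "DOI", "year": "Publication Year"},
    "wos": {"title": "TI", "doi": "DI", "year": "PY"},
}


@dataclass
class Record:
    title: str
    doi: str
    year: str
    sources: set[str] = field(default_factory=set)


def normalize_title(title: str) -> str:
    # Drop accents, punctuation and case so trivially different titles match.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode()
    return re.sub(r"[^a-z0-9]+", " ", ascii_text.lower()).strip()


def normalize_doi(doi: str) -> str:
    return doi.strip().lower().removeprefix("https://doi.org/")


def merge_exports(exports: dict[str, list[dict[str, str]]]) -> list[Record]:
    """Merge rows from several databases, keeping one record per DOI or title."""
    records: list[Record] = []
    by_doi: dict[str, Record] = {}
    by_title: dict[str, Record] = {}
    for source, rows in exports.items():
        cols = COLUMN_MAP[source]
        for row in rows:
            title = row.get(cols["title"], "").strip()
            doi = normalize_doi(row.get(cols["doi"], ""))
            key = normalize_title(title)
            # Match on DOI first, then fall back to the normalized title.
            match = by_doi.get(doi) if doi else None
            if match is None and key:
                match = by_title.get(key)
            if match is None:
                match = Record(title, doi, row.get(cols["year"], "").strip())
                records.append(match)
            match.sources.add(source)
            if doi:
                match.doi = match.doi or doi
                by_doi[doi] = match
            if key:
                by_title[key] = match
    return records
```

Keeping the set of sources on each merged record preserves the per-database counts that the PRISMA flow diagram reports before duplicates are removed.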

Methodology and Implementation

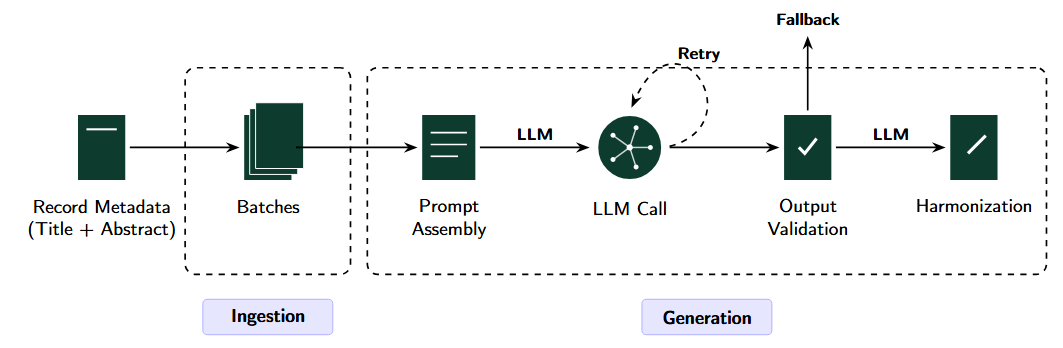

The pipeline was built with a modular and “human-in-the-loop” approach:

- Data Ingestion: Processing of CSV/XLS files from various bibliographic databases.

- Automated Screening: Using Gemini to evaluate adherence to inclusion/exclusion criteria based on titles and abstracts.

- RAG-powered Eligibility: For selected papers, the system builds a local vector store (FAISS). Documents are segmented, encoded as embeddings, and queried to verify specific criteria within the full text.

- Harmonization: Automatic extraction of study attributes into a consistent schema for final reporting.
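The retrieval loop behind the RAG eligibility step can be illustrated without external dependencies. In this sketch, chunk_text splits a paper into overlapping windows, embed is a toy hashed bag-of-words vector standing in for a real embedding model, and ChunkIndex performs the exhaustive inner-product search that a flat FAISS index (such as IndexFlatIP over normalized vectors) does at scale. Chunk sizes, names, and the embedding are assumptions for illustration only.

```python
import math
import re
import zlib


def chunk_text(text: str, size: int = 800, overlap: int = 200) -> list[str]:
    """Split text into overlapping windows so a criterion near a boundary is not cut off."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def embed(text: str, dim: int = 512) -> list[float]:
    """Toy hashed bag-of-words vector, L2-normalized (stand-in for a real embedding model)."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(token.encode()) % dim] += 1.0  # crc32 is stable across runs
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class ChunkIndex:
    """Exhaustive inner-product search over normalized vectors, i.e. cosine similarity."""

    def __init__(self, chunks: list[str]) -> None:
        self.chunks = chunks
        self.vectors = [embed(c) for c in chunks]

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        q = embed(query)
        scored = [
            (sum(a * b for a, b in zip(q, v)), chunk)
            for v, chunk in zip(self.vectors, self.chunks)
        ]
        return sorted(scored, key=lambda s: s[0], reverse=True)[:k]
```

The top-ranked chunks would then be passed to the LLM together with the criterion being checked, so that each eligibility verdict can point to the passage it relies on.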

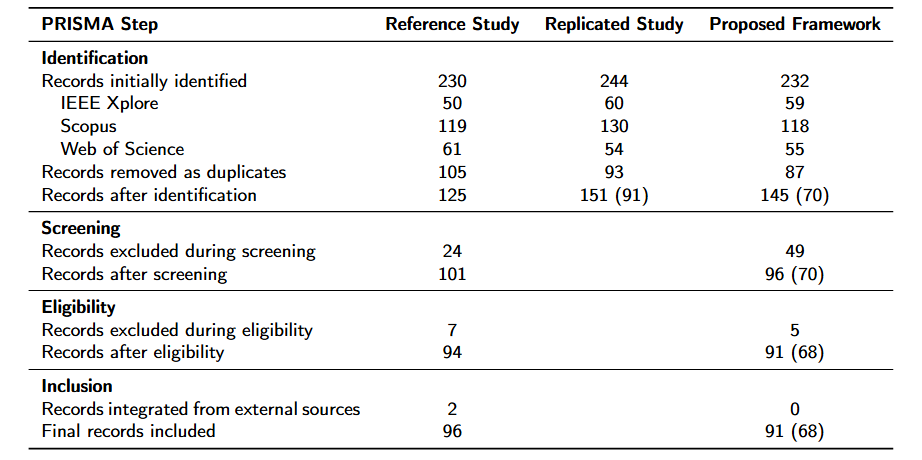

Results: Transparency and Reproducibility

Applying the framework significantly reduced screening times. Structured prompts and deterministic logic ensure that every decision is justified and traceable, reducing the variability typical of human reviewers.
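One way to make each screening decision traceable is to require a structured reply and validate it before accepting it. The sketch below assumes a JSON reply with decision and justification fields; the prompt wording, the "uncertain" label, and all function names are hypothetical. call_model stands in for whatever wraps the Gemini API (for example a thin function around the google-genai SDK's client.models.generate_content), which keeps the logic testable with a stub.

```python
import json
import re
from collections.abc import Callable
from dataclasses import dataclass

PROMPT_TEMPLATE = """You are screening records for a PRISMA 2020 systematic review.
Inclusion criteria:
{inclusion}
Exclusion criteria:
{exclusion}

Title: {title}
Abstract: {abstract}

Answer with JSON only, for example:
{{"decision": "include", "justification": "one sentence citing the criteria"}}
"decision" must be one of "include", "exclude", "uncertain"."""

VALID_DECISIONS = {"include", "exclude", "uncertain"}


@dataclass
class ScreeningDecision:
    record_id: str
    decision: str
    justification: str
    raw_response: str  # kept verbatim for the audit trail


def _bullets(items: list[str]) -> str:
    return "\n".join(f"- {item}" for item in items)


def build_prompt(title: str, abstract: str, inclusion: list[str], exclusion: list[str]) -> str:
    return PROMPT_TEMPLATE.format(
        inclusion=_bullets(inclusion), exclusion=_bullets(exclusion), title=title, abstract=abstract
    )


def parse_decision(record_id: str, raw: str) -> ScreeningDecision:
    """Accept only well-formed replies; anything else is flagged 'uncertain' for a human."""
    match = re.search(r"\{.*\}", raw, re.DOTALL)  # tolerate surrounding prose or code fences
    try:
        data = json.loads(match.group(0) if match else raw)
        decision = str(data["decision"]).strip().lower()
        justification = str(data.get("justification", "")).strip()
    except (json.JSONDecodeError, KeyError, TypeError):
        return ScreeningDecision(record_id, "uncertain", "unparseable model output", raw)
    if decision not in VALID_DECISIONS or not justification:
        return ScreeningDecision(record_id, "uncertain", justification or "missing justification", raw)
    return ScreeningDecision(record_id, decision, justification, raw)


def screen(record_id: str, title: str, abstract: str, inclusion: list[str],
           exclusion: list[str], call_model: Callable[[str], str]) -> ScreeningDecision:
    """Build the prompt, call the injected model function, and validate its reply."""
    prompt = build_prompt(title, abstract, inclusion, exclusion)
    return parse_decision(record_id, call_model(prompt))
```

Mapping malformed or out-of-vocabulary replies to "uncertain" instead of guessing is what lets a human reviewer pick them up, and storing raw_response keeps the original model output available for audit.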

Key Results:

- Time Efficiency: Drastic reduction in the time required for reading and classifying papers.

- Accuracy: High consistency with manual validations, with fallback mechanisms that route uncertain cases to human review.

- Standardization: Automatic generation of harmonized metadata ready for final synthesis.

Note: To maintain confidentiality, all company names, locations, dates, and specific proprietary values have been anonymized or modified. The analysis focuses on the technical methodology and challenges encountered during the project.